<source : unsplash>一、前言

檢視自己的學習成效,最好的方式就是自我測驗。在學完SVM後,你可能也會和我一樣好奇,對於SVM的知識到底掌握多少呢?

因此,接下來的文章將會引用<25 Questions to test a Data Scientist on Support Vector Machines>文章中的題目,檢測我對於SVM的了解程度,同時也紀錄各題目的知識筆記。歡迎你一起來檢測自己對SVM了解的程度。

二、題目說明與解析

Question1.

Suppose you are using a Linear SVM classifier with 2 class classification problem. Now you have been given the following data in which some points are circled red that are representing support vectors.

<source : analyticsvidhya>

1) If you remove the following any one red points from the data. Does the decision boundary will change?

A) Yes

B) No

解答:

A

說明:

由於SVM的決策邊界是由最鄰近該邊界的訓練樣本所決定。以上圖為例,該如何分割才能完美將"+" 和 "-" 的資料分類呢?你應該會從中間畫一條線就,將 "+" 和 "-" 的資料分開。

而你是否發現,圖上圈起來的正是離你所畫的線(決策邊界)最近的樣本呢?因此,如果鄰近決策邊界的訓練資料點位置改變,決策邊界也會隨之改變。

Question2.

2) [True or False] If you remove the non-red circled points from the data, the decision boundary will change?

解答:

B

說明:

由於SVM的決策邊界是由最鄰近該邊界的訓練樣本所決定,所以移動非靠近決策邊界的訓練樣本並不會改變決策邊界。

Question3.

3) What do you mean by generalization error in terms of the SVM?

A) How far the hyperplane is from the support vectors

B) How accurately the SVM can predict outcomes for unseen data

C) The threshold amount of error in an SVM

解答:

B

說明:

所謂 generalization error (適應性誤差,又有人翻譯成泛化誤差) 在統計學上的意義是樣本外誤差。這是一種模型在未知的新資料上預測準確性的度量。

Question4.

4) When the C parameter is set to infinite, which of the following holds true?

A) The optimal hyperplane if exists, will be the one that completely separates the data

B) The soft-margin classifier will separate the data

C) None of the above

解答:

A

說明:

"Regularization parameter. The strength of the regularization is inversely proportional to C. Must be strictly positive. The penalty is a squared l2 penalty." 這是 Scikit-learn 文件中對於 C 參數的說明。

SVM中的 C 參數,是正規化參數 λ 的倒數,作為控制錯誤分類的懲罰,也就是控制邊界的寬度。如下圖所示,當C越大,對應越大的錯誤分類懲罰,邊界寬度越小,越容易將數據完美分類,但是這也意味著容易 Overfitting (過擬合)。

<source : Support Vector Machine Errors>

Question5.

5) What do you mean by a hard margin?

A) The SVM allows very low error in classification

B) The SVM allows high amount of error in classification

C) None of the above

解答:

A

說明:

Hard Margin (硬間隔) 表示SVM對分類正確性的要求非常嚴格,所以模型會盡力在訓練資料集上表現的更好,這也意味著容易Overfitting(過擬合)。

Question6.

6) The minimum time complexity for training an SVM is O(n²). According to this fact, what sizes of datasets are not best suited for SVM’s?

A) Large datasets

B) Small datasets

C) Medium sized datasets

D) Size does not matter

解答:

A

說明:

當SVM模型的最小時間複雜度O(n²),資料集數量越大,計算時間越可觀。所以大資料集並不適合使用SVM。

Question7.

7) The effectiveness of an SVM depends upon:

A) Selection of Kernel

B) Kernel Parameters

C) Soft Margin Parameter C

D) All of the above

解答:

D

說明:

以上3點都會影響到SVM的表現,所以要透過不斷測試來尋找最佳的參數組合,在提高效率、減少誤差與避免過擬合中找到平衡。

Question8.

8) Support vectors are the data points that lie closest to the decision surface.

A) TRUE

B) FALSE

解答:

A

說明:

SVM的決策邊界是由最鄰近該邊界的訓練樣本所決定。

Question9.

9) The SVM’s are less effective when:

A) The data is linearly separable

B) The data is clean and ready to use

C) The data is noisy and contains overlapping points

解答:

C

說明:

SVM適用於資料是明顯可分的情況下。若已知資料有重疊現象,要想得到一個清晰的分類超平面非常困難。

Question10.

10) Suppose you are using RBF kernel in SVM with high Gamma value. What does this signify?

A) The model would consider even far away points from hyperplane for modeling

B) The model would consider only the points close to the hyperplane for modeling

C) The model would not be affected by distance of points from hyperplane for modeling

D) None of the above

解答:

B

說明:

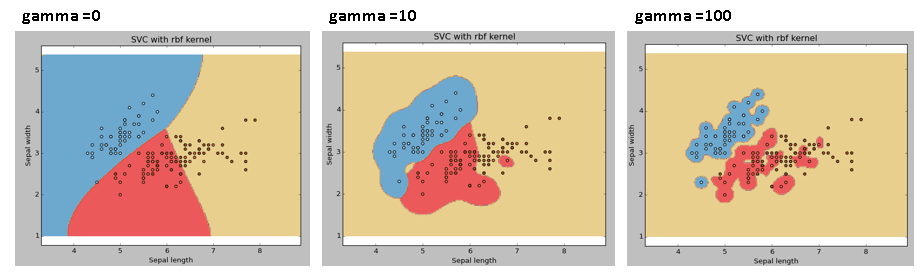

SVM中的gamma參數,是核函數的系數,伴隨著 kernel rbf 出現,表示接近或遠離超平面的點的影響。如下圖所示,gamma值的大小,決定訓練樣本的影響性。

gamma值越小,決策邊界的變化較小,,模型將過於受限,無法包含訓練數據集的所有點,而無法真正捕獲形狀。

gamma值越大,會增加訓練樣本的影響性,導致產生一個較緊、顛簸的決策邊界,模型將很好地捕獲數據集的形狀。預設值是 1/k (k為特徵數)。

<source : Support Vector Machine — Introduction to Machine Learning Algorithms>

Question11.

11) The cost parameter in the SVM means:

A) The number of cross-validations to be made

B) The kernel to be used

C) The tradeoff between misclassification and simplicity of the model

D) None of the above

解答:

C

說明:

代價參數即錯誤分類的成本,決定SVM擬合(學習)數據的程度。當C較小時,追求較平滑的決策邊界;當C較大時,追求正確分類更多資料點。

<source : Support Vector Machine Errors>

Question12.

12)

Suppose you are building a SVM model on data X. The data X can be error prone which means that you should not trust any specific data point too much. Now think that you want to build a SVM model which has quadratic kernel function of polynomial degree 2 that uses Slack variable C as one of it’s hyper parameter. Based upon that give the answer for following question.

What would happen when you use very large value of C(C->infinity)?

Note: For small C was also classifying all data points correctly

A) We can still classify data correctly for given setting of hyper parameter C

B) We can not classify data correctly for given setting of hyper parameter C

C) Can’t Say

D) None of these

解答:

A

說明:

若參數C的值很大,說明誤分類的懲罰項非常大,優化的目標應該是讓分類超平面儘量將所有的資料點都正確分類。

Question13.

13) What would happen when you use very small C (C~0)?

A) Misclassification would happen

B) Data will be correctly classified

C) Can’t say

D) None of these

解答:

A

說明:

如下圖所示,因為錯誤分類的懲罰項非常小,邊界越大,越可能有部分點會出現分類錯誤現象。

<source : Support Vector Machine Errors>

Question14.

14) If I am using all features of my dataset and I achieve 100% accuracy on my training set, but ~70% on validation set, what should I look out for?

A) Underfitting

B) Nothing, the model is perfect

C) Overfitting

解答:

C

說明:

模型對於訓練資料集的準確率高,但對於測試資料集的準確率卻低,這就是所謂的 Overfitting (過擬合)。可透過增加數據量或是簡化模型來解決Overfitting (過擬合)的問題。

Question15.

15) Which of the following are real world applications of the SVM?

A) Text and Hypertext Categorization

B) Image Classification

C) Clustering of News Articles

D) All of the above

解答:

D

說明:

SVM是具有高度通用的模型,幾乎可用於現實世界的所有問題,包括回歸,聚類和手寫識別。

恭喜你完成了 15 道題目,從錯誤中學習,對於知識的掌握會更加熟悉。加油喔,還有10題,相信你一定能順利完成。

下一篇:考驗你對 SVM (支持向量機) 了解多少呢?試試看這25道題吧!(下)

---

參考資料:

![[PLC基礎程式實作筆記] 快速理解自保持狀態 & 程式實作](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjp5L53TvWtTUbakp9zbSS96WyhIQqdGRo9aYZNgc_kJ2U56kw-NNzgkNmKunFi7tS9XQDX7miUsmxvhaOkl56pUR7YsvlSJPwVxYRILiyYrnLT8YQgc3pSkfuc81yC4QwzZv-CV4MM7CcU/w180/%25E8%2587%25AA%25E4%25BF%259D%25E6%258C%2581.PNG)

![[除錯救星] Code::Blocks >> can't find compiler executable in your configured search path's for gnu gcc compiler](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEilxbKOd7yAz3lyOBpMI3jgwZPOkXhtC8xsE_lCc1F0zy3ByRvfrRHR29hacD1LKRNwohxXDty0_aqtdjMQqfpTm1rGNNs-PsOzRVO9gh-2jbE0FwXRjBqVm9sYuevUPX4bVtBxlggJA2z9/w180/error.PNG)

![[ LabVIEW for Arduino ] 6 個步驟,輕鬆完成LabVIEW for Arduino的環境建置](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjtMcc0F0liYVKhv18wsRi9LDRkwEb8GT_TodHixFZaLVKpFtZJz2K6sF4FwQugWfrLx-kKp7lZ8Xe2jQJTnR5iKY1Ek7XgEYoiCNkt9ghXtJEf0FqABo0Nkeu5aQWnle5lhy30u4ZDvAAp/w180/harrison-broadbent-fZB51omnY_Y-unsplash.jpg)

![[ROS SLAM系列] ROS Melodic中的ORB SLAM2環境建置 與 Stereo SLAM實現](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEilMu37F7nOmxEaj6s9GC6iq224_heJEzcO7Z3eF6I1F7wcymoDT_kSNgEr820lrnI2PQ0LmRgF7ywZvEQlu1SrE4OODGvrTVBhdt7hldKIMeYt4kCvMGnRzeZF8sPpQq56fmVreidJyPs4/w180/23.PNG)

![[OpenCV筆記] 12. 傅立葉轉換實作](https://blogger.googleusercontent.com/img/a/AVvXsEhGtpZu-ujaPIotGxwEygKsrWYP4-s0g-9E3sgef1SZg9X4YdXBK0LIOrFA3usC0jrNbIPGHLr59fTC3DYgTjZZ1xb2XirqydrTWbuou_PfrrxeX4hnZAw8LSiVyCmeT0nVnKiiG_iat_Vt1c18CXY-swzQs6VyOVj0I5bUgL1546G6P2zkItLOfOQDiw=w180)

![[LabVIEW程式技巧] 整合練習 : 口罩販賣機軟體設計](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhyhoWfBATSpHetTVdhWTksg3LKJoKtBaA-N9BvrQs8tBo1Q-U7OqZJUV_XvnMlm-qg8v4DDSd0ItZnqs0lKeRBxHHZ8jfKuQzZtQNasziY3B9oPKE-7rJ0Tuxc69ZEgqZ2wW4r5Z4LsUyK/w180/frontpanel.PNG)

![[matlab練習] 實作離散系統之單位步階響應 (差分方程與轉移函數)](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEh793ICAgWLoYehhlcbBRSw9oeK3UbxbuUw-8Re5zxKaMGi91jQPXIHnv2QEYGdVh9Oiq-Ci3enPgcp2d8ym-s90u-p6U8opC548ZyOLDPTxMD58b3fEvCI17yMFt0-WozpcSBr29ww3V56/w180/1.PNG)

![[ Arduino之Ardublock實作筆記 ] 遙控車控制實作 (整合藍芽與紅外線接收模組)](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgPaC8gnfZqTjBKgCxfzpCimm67LXth4S4ZZ06RE7TVQToinUB8pgXYSVdtEy0oW-wzXgKc3EcuDIyW1Lcxwi5J-k7RrjOCiutqRrHbo5TLHSeuhoAy9Mv5FEmlQrKNcSjczq62Q2ie73co/w180/IMG_20200715_110132.jpg)

0 留言